Experience the Future of Fraud Detection

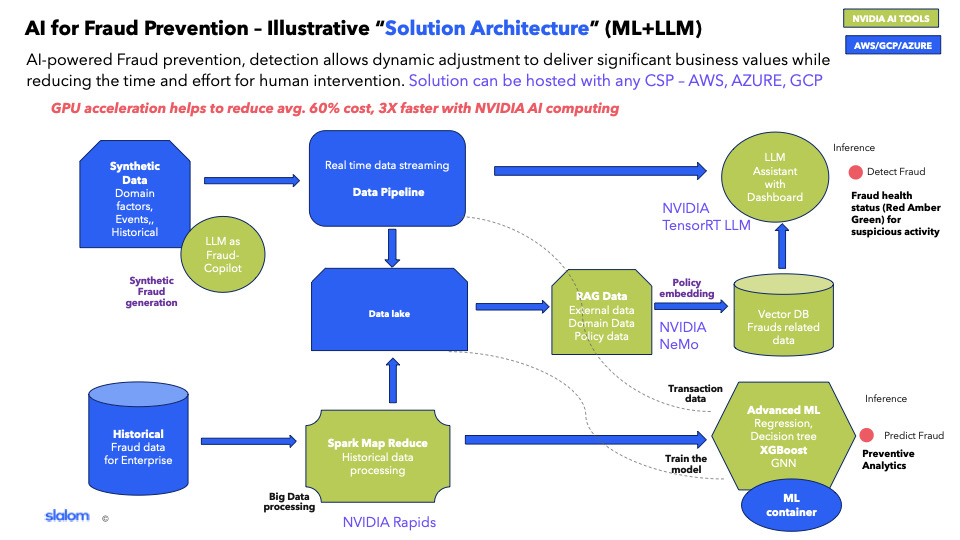

In an era of increasingly sophisticated financial fraud, your financial services processes deserve an equally advanced defense. Welcome to FriskAI, which combines state-of-the-art (SOTA) machine learning algorithms and large language models (LLMs) to safeguard your financial operations.

Key Features

Everything you need to identify fraud.

Technology

State of the Art

Machine learning (ML) algorithms harness the relationships and connections within your data to detect fraudulent patterns. Large language models (LLMs) apply the latest advances in natural language processing to understand and analyze textual data.

Integrations

Continuous Learning and Improvement

Our system continuously evolves with each interaction and each new data input, helping our models stay ahead of emerging fraud tactics.

Responsible AI

Guardrails for Safety

Stringent guardrails enforce ethical usage and adhere to the highest standards of data privacy and security, safeguarding sensitive information.

Explainability

Conversational chatbot

Engage directly with our AI to understand the reasoning behind fraud detection decisions. Get clear, concise explanations of why an application was flagged, enhancing trust and transparency.

Differentiated History

Our Unique Approach

FriskAI was trained on publicly available datasets (checking transactions and loan data) comprising over 960,000 transactions.

- Real-Time Detection

Instantly identify and respond to fraudulent applications, minimizing risk and potential loss.

- User-Friendly Interface

Intuitive design makes it easy for users to navigate and understand the system's functionality.

Fiercely Human Approach

Building AI Responsibly

The rapid expansion of generative AI offers incredible opportunities for innovation, yet it also presents new challenges. We are dedicated to developing AI responsibly through a people-centric approach that emphasizes education, science, and customer needs, and we apply this commitment throughout the entire AI lifecycle.

- Fairness

Assessing the impact on diverse stakeholder groups

- Explainability

Understanding and evaluating system outputs

- Privacy and Security

Appropriately obtaining, using, and protecting data and models

- Safety

Preventing harmful system outputs and misuse

- Controllability

Implementing mechanisms to monitor and guide AI system behavior

- Veracity and Robustness

Ensuring accurate system outputs, even in the face of unexpected or adversarial inputs

- Governance

Applying best practices throughout the AI supply chain, including providers and deployers

- Transparency

Empowering stakeholders to make informed decisions about their interactions with AI systems

Our Perspective

Guardrails for Responsible AI

- Custom-Tailored Guardrails

Implementing safeguards on foundation models (FMs), tailored to your application requirements, to enforce responsible AI policies.

- Consistent AI Safety Levels

Evaluating user inputs and FM responses against use-case-specific policies, providing an additional layer of protection across all large language models (LLMs) on Amazon Bedrock, including fine-tuned models.

- Blocking Undesirable Topics

Ensuring a relevant and safe user experience by customizing interactions to stay on topic. Detecting and blocking user inputs and FM responses related to restricted topics, such as a banking assistant avoiding investment advice.

- Content Filters

Filtering harmful content according to responsible AI policies. Configurable thresholds help filter out harmful interactions across categories like hate speech, insults, sexual content, violence, misconduct, and prompt attacks. These filters enhance existing FM protections by automatically evaluating both user queries and FM responses to detect and prevent restricted content.

- PII Redaction and Pseudonymisation

Redacting sensitive information, such as personally identifiable information (PII), to protect privacy. Detecting sensitive content in user inputs and FM responses, with the option to reject or redact it based on predefined or custom criteria. For instance, redacting personal information while generating summaries of call center transcripts between customers and agents.

- Custom Word Filters

Blocking inappropriate content with customizable word filters. Configuring specific words or phrases to detect and block in user interactions with generative AI applications, including profanity as well as custom terms such as competitor names or other sensitive words.
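As a rough illustration of how PII redaction and custom word filtering behave, here is a minimal Python sketch of a rule-based filter. It is a hypothetical example, not how Amazon Bedrock Guardrails or FriskAI implement these safeguards: the regular expressions, the blocklist entries (including the made-up competitor name "acmebank"), the refusal message, and the function names `redact_pii`, `contains_blocked_word`, and `apply_guardrail` are all assumptions chosen for illustration.

```python
import re

# Hypothetical illustration only: a toy, rule-based guardrail.
# This is NOT the implementation used by Amazon Bedrock Guardrails or FriskAI.

# Assumed example patterns for two common PII types.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Assumed blocklist: a placeholder profanity and a hypothetical competitor name.
BLOCKED_WORDS = {"badword", "acmebank"}

# Assumed message returned when the word filter blocks a request.
BLOCKED_MESSAGE = "Sorry, I can't help with that request."


def redact_pii(text: str) -> str:
    """Replace every PII match with a placeholder such as {EMAIL}."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub("{" + label + "}", text)
    return text


def contains_blocked_word(text: str) -> bool:
    """Case-insensitive whole-word match against the blocklist."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return any(word in BLOCKED_WORDS for word in words)


def apply_guardrail(text: str) -> str:
    """Block the text if it hits the word filter; otherwise redact PII."""
    if contains_blocked_word(text):
        return BLOCKED_MESSAGE
    return redact_pii(text)


if __name__ == "__main__":
    print(apply_guardrail("Contact jane.doe@example.com, SSN 123-45-6789."))
    print(apply_guardrail("Should I switch to AcmeBank?"))
```

Run as a script, the first call prints `Contact {EMAIL}, SSN {US_SSN}.` and the second prints the refusal message. Matching whole words rather than substrings keeps a term like "acmebanking" from being blocked by the "acmebank" entry; a production system would typically rely on managed detectors and model-based topic classification rather than hand-written patterns like these.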